Context Engineering Is the Whole Game Now (And Why Naive RAG Had to Die)

If the model is the brain, the context system is the working memory. As models grow and evolve, the working-memory problem is getting harder, not easier. So what does your context pipeline actually look like?

Published by Blake Martz on Nov 25, 2025

Yesterday Anthropic released Claude Opus 4.5: the first model to break 80% on SWE-bench Verified, with automatic context compression and a new "effort parameter" that lets you dial reasoning depth per call. Last week Google finally dropped Gemini 3 Pro: a 1-million-token context window and a 1501 Elo score on LMArena, making it the first model to crack 1500 on that leaderboard.

Two very different bets on the same problem.

Anthropic says: make the model smarter about what it keeps. Google says: just make the model smarter and give it more room. Both are right that context is the bottleneck. But neither thinks you should just dump everything in and hope for the best.

"Does this mean RAG is really dead?"

If you've built production retrieval systems, such as on-prem RAG stacks or enterprise knowledge bases, then you already know the answer: the naive version of RAG is long dead. "Semantic search, top-k and pray" is fine for proofs of concept, but for production it's not nearly enough.

What has replaced "RAG" is context engineering. And bigger context windows don't reduce the need for it; they make it more important.

https://x.com/peterjliu/status/1982099832249712750

Naive RAG Never Actually Worked

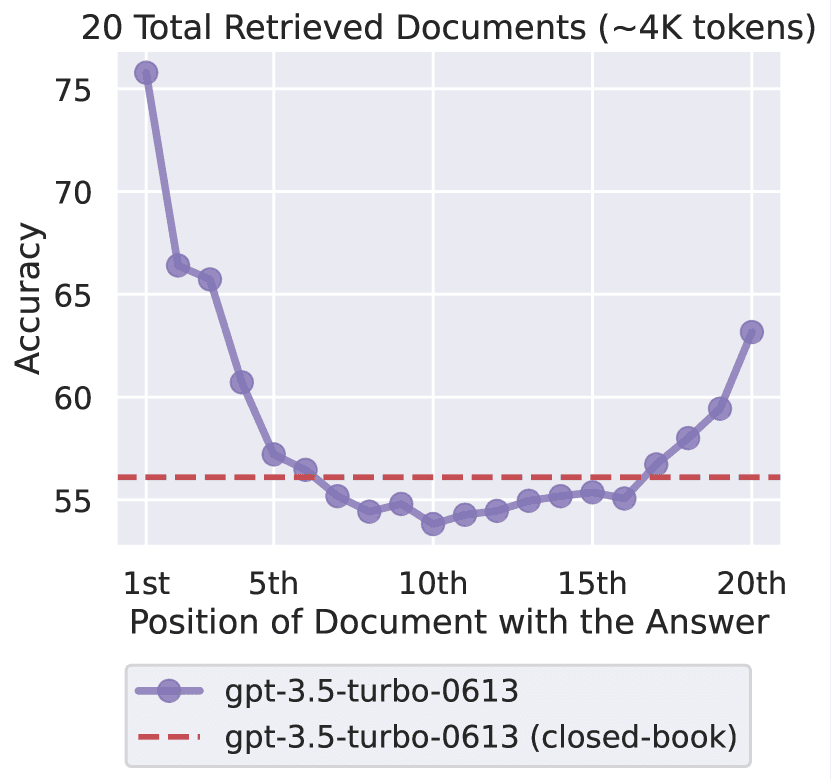

Everyone has had the moment where you're staring at a model that you know has the right context, and it gets the answer wrong anyway. You gave it everything it needed, and it ignored the most important part sitting right in the middle. The Lost in the Middle paper (Liu et al., 2023) explains what is happening here: models, like us, have limited attention spans. They use information at the beginning or end of a long context far more reliably than information buried in the middle, and accuracy drops when the key fact sits there.

The instinct with huge context windows is to dump in everything. But when information goes in indiscriminately, you aren't helping the model; you are burying it alive. More is not better, and more context ≠ more accuracy. Nine times out of ten, the extra is nothing but noise.

https://arxiv.org/pdf/2307.03172
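A minimal sketch of one common mitigation, assuming your retriever returns a relevance score per chunk: keep fewer chunks, then place the strongest ones at the start and end of the context, where the paper found models use information most reliably. The `Chunk` type, `select_and_place`, and the `min_score`/`max_chunks` defaults are hypothetical, and edge placement is a heuristic that follows from the paper's findings rather than a method it prescribes.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    score: float  # relevance from your retriever; higher is better (scale is an assumption)


def select_and_place(chunks: list[Chunk], max_chunks: int = 5, min_score: float = 0.5) -> list[Chunk]:
    """Keep only strong chunks, then put the best ones at the edges of the context."""
    # Drop weak matches instead of padding the context with noise.
    kept = [c for c in chunks if c.score >= min_score]
    # Rank best-first and cap how many chunks get through.
    ranked = sorted(kept, key=lambda c: c.score, reverse=True)[:max_chunks]
    # Alternate placement: rank 1 goes first, rank 2 last, rank 3 second, rank 4 second-to-last...
    # so the weakest survivors land in the middle, where models attend least reliably.
    front: list[Chunk] = []
    back: list[Chunk] = []
    for i, chunk in enumerate(ranked):
        (front if i % 2 == 0 else back).append(chunk)
    return front + back[::-1]


if __name__ == "__main__":
    docs = [Chunk("a", 0.9), Chunk("b", 0.8), Chunk("c", 0.7), Chunk("d", 0.6), Chunk("e", 0.2)]
    print([c.text for c in select_and_place(docs)])  # ['a', 'c', 'd', 'b']
```

The cutoff does the "less is more" work; the reordering only decides where the survivors sit.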

Semantic Search Isn’t Enough

The biggest myth of the RAG boom was that semantic search was ever enough. Embed everything, dump it in a vector database, run a nearest-neighbor search on cosine similarity, and you're done, right?

Anyone who's dealt with real-world problems in technical domains (legal, financial, etc.) or with highly technical docs of any kind knows how brittle pure semantic search is. It misses exact strings and identifiers. It gets confused by jargon and acronyms. It retrieves things that seem similar but are irrelevant. It often loses to plain keyword search in scenarios that require precision, such as looking up an exact error code or clause number.

Hybrid retrieval (keyword search + semantic search + metadata filtering) consistently beats pure semantic similarity on both precision and recall. If you're only doing semantic search today, you are already behind.
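A minimal sketch of hybrid retrieval, assuming each document already carries an embedding vector from some embedding model (the two-dimensional toy vectors below are made up) and a metadata dict. It applies the metadata filter first, ranks the survivors with a small BM25 keyword scorer and with cosine similarity, then merges the two rankings with reciprocal rank fusion (RRF, using the commonly cited k = 60). RRF is one fusion choice among several, swapped in here for illustration; `Doc` and `hybrid_search` are hypothetical names.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Doc:
    id: str
    text: str
    vector: list[float]  # assumed precomputed by some embedding model
    meta: dict = field(default_factory=dict)


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def bm25_scores(query: str, docs: list[Doc], k1: float = 1.5, b: float = 0.75) -> dict[str, float]:
    """Standard BM25 keyword scores over the candidate set."""
    toks = {d.id: tokenize(d.text) for d in docs}
    n = len(docs)
    avg_len = sum(len(t) for t in toks.values()) / n or 1.0
    scores = {}
    for d in docs:
        tf, dl, score = Counter(toks[d.id]), len(toks[d.id]), 0.0
        for term in set(tokenize(query)):
            df = sum(1 for t in toks.values() if term in t)
            if df == 0:
                continue
            idf = math.log(1 + (n - df + 0.5) / (df + 0.5))
            f = tf[term]
            score += idf * f * (k1 + 1) / (f + k1 * (1 - b + b * dl / avg_len))
        scores[d.id] = score
    return scores


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(query: str, query_vec: list[float], docs: list[Doc],
                  filters: dict, top_k: int = 3, rrf_k: int = 60) -> list[str]:
    # 1. Metadata filter: hard constraints (doc type, jurisdiction, date...) before any scoring.
    cands = [d for d in docs if all(d.meta.get(k) == v for k, v in filters.items())]
    if not cands:
        return []
    # 2. Two independent rankings. The keyword ranking only includes docs sharing a query term.
    kw = bm25_scores(query, cands)
    kw_rank = sorted((d for d in cands if kw[d.id] > 0), key=lambda d: kw[d.id], reverse=True)
    sem_rank = sorted(cands, key=lambda d: cosine(query_vec, d.vector), reverse=True)
    # 3. Reciprocal rank fusion: each ranking adds 1 / (rrf_k + rank) to a doc's score.
    fused: dict[str, float] = {}
    for ranking in (kw_rank, sem_rank):
        for rank, d in enumerate(ranking, start=1):
            fused[d.id] = fused.get(d.id, 0.0) + 1.0 / (rrf_k + rank)
    return sorted(fused, key=lambda doc_id: fused[doc_id], reverse=True)[:top_k]


if __name__ == "__main__":
    docs = [
        Doc("d1", "E1234 timeout when syncing ledger", [0.1, 0.9], {"type": "runbook"}),
        Doc("d2", "network latency troubleshooting guide", [0.9, 0.1], {"type": "runbook"}),
        Doc("d3", "E1234 timeout in billing", [0.2, 0.8], {"type": "blog"}),
    ]
    # The toy query vector is closest to d2, but only d1 contains the exact error code.
    print(hybrid_search("error E1234 timeout", [1.0, 0.0], docs, {"type": "runbook"}))  # ['d1', 'd2']
```

Pure cosine ranking would put `d2` first here; the keyword signal pulls the document with the exact identifier to the top, and the metadata filter keeps `d3` out entirely.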

The Bottleneck Was Never the Model

This is what I've seen firsthand building retrieval systems: the models are not the limiting factor anymore. The context is.

Specifically: how you select context, how you structure it, how you insert it, and how you keep it clean over time.
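Here is a rough sketch of the "structure" and "insert" steps, assuming plain-text prompts: each retrieved chunk is wrapped in a tag that carries its source, and the question comes after the documents. XML-style tags and putting long documents before the query are conventions Anthropic's prompting guidance recommends; the tag names and the `build_prompt` helper here are hypothetical.

```python
from html import escape


def build_prompt(question: str, chunks: list[dict]) -> str:
    """Wrap each retrieved chunk with its source, then ask the question after the documents."""
    parts = ["<documents>"]
    for i, chunk in enumerate(chunks, start=1):
        # Escape so a chunk's own text cannot close or forge the surrounding tags.
        parts.append(
            f'<document index="{i}" source="{escape(chunk["source"])}">\n'
            f"{escape(chunk['text'], quote=False)}\n"
            "</document>"
        )
    parts.append("</documents>")
    # Question goes last, with an instruction to stay grounded and cite by index.
    parts.append(
        "Answer using only the documents above and cite them by index.\n"
        f"<question>{escape(question, quote=False)}</question>"
    )
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt("What does E1234 mean?", [{"source": "runbook.md", "text": "E1234 is a sync timeout."}]))
```

Tagging each chunk with its source also makes failures debuggable: when an answer is wrong, you can see which document the model leaned on.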

Agents don't derail because the model suddenly becomes stupid; they derail because their context becomes polluted. The agent is carrying around irrelevant scraps. The retrieval pipeline keeps injecting duplicates. The conversation grows into a landfill of stale distractions.

This is context rot. Once it sets in, no model is going to save you. Not Gemini 3 with a million tokens. Not Opus 4.5 with its compression techniques.
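A small hygiene pass along those lines, as a sketch: drop items older than a turn limit unless they are pinned, and skip near-duplicates of anything already kept. The names, the 10-turn age limit, and the 0.8 threshold are hypothetical; word-shingle Jaccard similarity is a simple stand-in for the MinHash or embedding-based deduplication a production system would more likely use.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    text: str
    turn: int             # conversation turn when this item was added
    pinned: bool = False  # e.g. system instructions or user goals that must never be pruned


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Overlapping n-word sequences; short texts become a single shingle."""
    words = text.lower().split()
    if len(words) < n:
        return {tuple(words)}
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    return len(a & b) / len(a | b) if a | b else 1.0


def clean_context(items: list[ContextItem], current_turn: int,
                  max_age: int = 10, dup_threshold: float = 0.8) -> list[ContextItem]:
    kept: list[ContextItem] = []
    seen: list[set] = []
    for item in items:
        # Prune stale items unless they are pinned.
        if not item.pinned and current_turn - item.turn > max_age:
            continue
        # Skip near-duplicates of something already kept (first copy wins).
        sig = shingles(item.text)
        if any(jaccard(sig, s) >= dup_threshold for s in seen):
            continue
        kept.append(item)
        seen.append(sig)
    return kept
```

Running a pass like this before every model call keeps rot from compounding, instead of trying to fix it after the agent has already gone off the rails.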

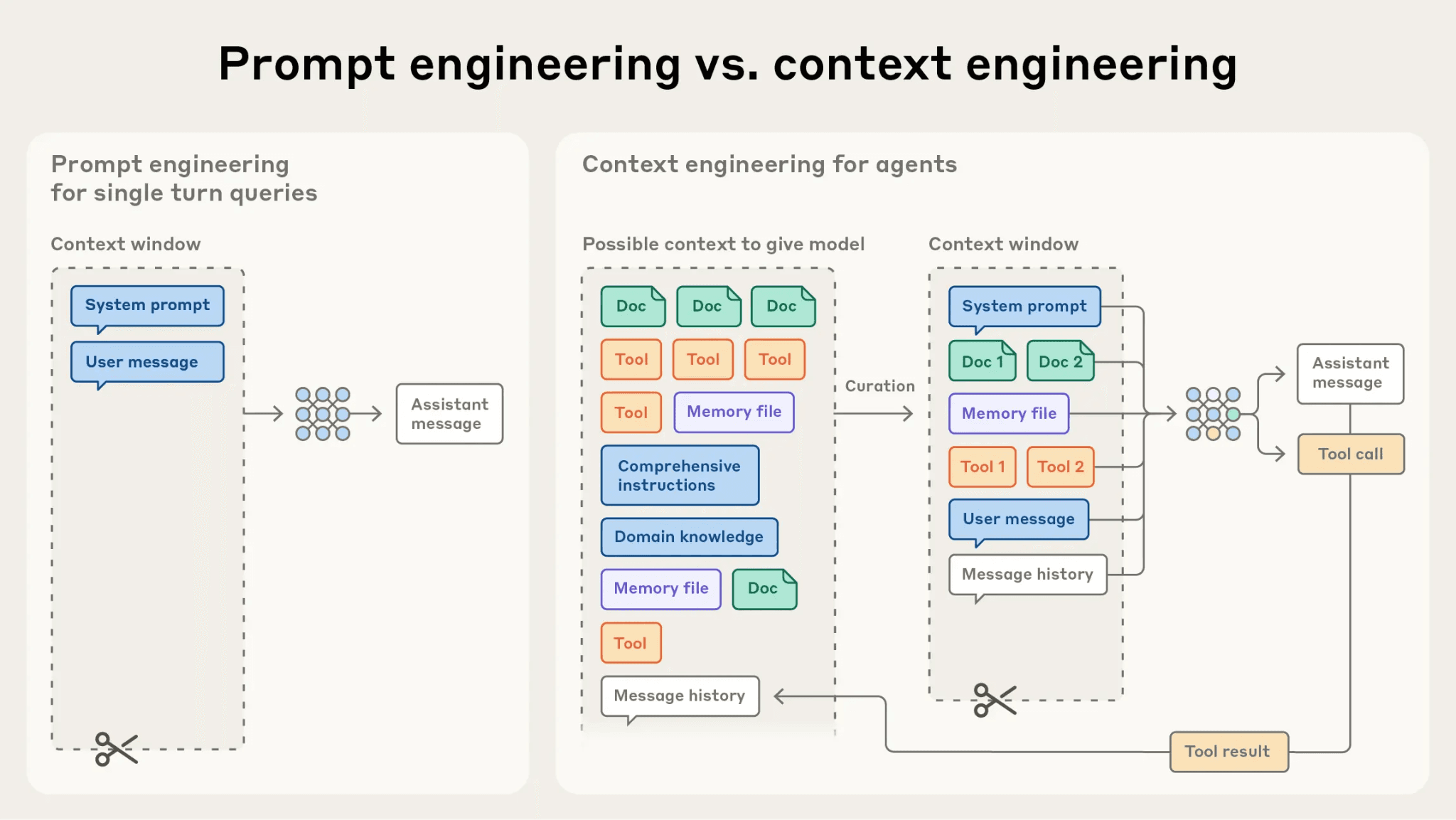

What Context Engineering Actually Means

Context engineering is the art of giving a model everything it needs and nothing that it doesn't. Continuously, dynamically, and with intention.

It's not prompt engineering. It's not "just better chunking." It includes hybrid retrieval, dynamic context pruning, selective injection, memory management, agentic search, context placement, relevance scoring beyond embeddings, and, increasingly, agentic retrieval orchestration.

Larger context windows don't eliminate the need for this. They spotlight it. When you can feed a model a million tokens, the question isn't "Can I?" The question is "Should I?" Which million? For how long? How do I keep them from degrading reasoning over time?
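One way to answer "which million?" mechanically, sketched under assumptions: give the context a fixed token budget and fill it in priority order, so instructions and pinned memory always make it in and a huge low-priority document cannot crowd out the conversation. The section names, the greedy policy, and the 4-characters-per-token estimate are all illustrative assumptions; a real pipeline would count tokens with the model's own tokenizer.

```python
def estimate_tokens(text: str) -> int:
    # Crude assumption: ~4 characters per token for English text. Use your model's tokenizer in practice.
    return max(1, len(text) // 4)


def pack_context(sections: list[tuple[str, list[str]]], budget: int) -> list[str]:
    """Greedily fill a token budget. Earlier sections have priority; items in a section are pre-ranked."""
    packed: list[str] = []
    used = 0
    for name, items in sections:
        for item in items:
            entry = f"[{name}] {item}"
            cost = estimate_tokens(entry)
            if used + cost > budget:
                continue  # skip it; a smaller, lower-ranked item may still fit
            packed.append(entry)
            used += cost
    return packed


if __name__ == "__main__":
    sections = [
        ("instructions", ["Answer as a support agent for Acme."]),
        ("memory", ["User prefers short answers."]),
        ("docs", ["Refund policy: returns within 30 days.", "shipping manual text " * 200]),
        ("history", ["user: can I return my order?"]),
    ]
    for line in pack_context(sections, budget=60):
        print(line)
```

The budget, not the window size, becomes the design parameter: a million-token window just means the budget is a choice rather than a hard limit.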

This is exactly why Opus 4.5's approach is interesting: instead of just expanding the window, Anthropic built automatic summarization that compresses earlier context as needed. That's context engineering baked into the product itself. But you still need to do it at the application layer too.

https://www.anthropic.com/engineering/effective-context-engineering-for-ai-agents
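The application-layer version can be as simple as this sketch: once the conversation exceeds a token budget, fold everything except the last few turns into a summary. This is not how Anthropic implements its compression; `compact_history`, the `summarize` callable (in practice another model call), and the thresholds are hypothetical.

```python
from typing import Callable, Optional

Message = dict[str, str]  # {"role": ..., "content": ...}


def estimate_tokens(text: str) -> int:
    # Crude ~4 characters/token assumption; swap in a real tokenizer.
    return max(1, len(text) // 4)


def compact_history(
    history: list[Message],
    summarize: Callable[[list[Message]], str],
    max_tokens: int = 2000,
    keep_recent: int = 6,
) -> tuple[Optional[str], list[Message]]:
    """Fold older turns into a summary once the history exceeds its token budget.

    Returns (summary or None, messages to send verbatim). Where the summary goes
    (system prompt, or a leading message) depends on your chat API.
    """
    total = sum(estimate_tokens(m["content"]) for m in history)
    if total <= max_tokens or len(history) <= keep_recent:
        return None, history
    cut = len(history) - keep_recent
    # summarize is hypothetical: typically a call to a (cheaper) model.
    return summarize(history[:cut]), history[cut:]


if __name__ == "__main__":
    hist = [{"role": "user" if i % 2 == 0 else "assistant", "content": "word " * 100} for i in range(10)]
    summary, recent = compact_history(hist, lambda old: f"{len(old)} earlier messages", max_tokens=500, keep_recent=4)
    print(summary, len(recent))  # 6 earlier messages 4
```

Keeping the most recent turns verbatim preserves the exact wording the model is currently working with, while the summary carries forward decisions and facts from earlier.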

Agentic Retrieval Is Next

The next wave is retrieval that's not static but agentic. The model decides when it needs more information. It chooses which retrieval tool to use. It can refine, correct, or repeat searches. It can prune irrelevant context. It can rewrite its own working memory. It adapts dynamically as the conversation evolves. This isn't RAG anymore. Naive RAG is dead. Long live context engineering!
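The loop below is a sketch of that pattern, not any vendor's API: a `decide` policy (in practice a model call with tool definitions; here a scripted stand-in) chooses at each step whether to search, with which tool and query, whether to forget a note, or whether to answer. `Action`, `State`, `agentic_retrieve`, and the tool names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Action:
    kind: str                    # "search", "forget", or "answer"
    tool: Optional[str] = None   # which retrieval tool to call
    query: Optional[str] = None  # the (possibly rewritten) search query
    note: Optional[str] = None   # for "forget": the note to drop from working memory


@dataclass
class State:
    question: str
    notes: list[str] = field(default_factory=list)  # working memory the agent curates


def agentic_retrieve(
    question: str,
    decide: Callable[[State], Action],
    tools: dict[str, Callable[[str], list[str]]],
    max_steps: int = 5,
) -> State:
    """Let a policy decide when to search, with which tool, what to forget, and when to stop."""
    state = State(question)
    for _ in range(max_steps):  # hard cap so a confused policy cannot loop forever
        action = decide(state)  # in practice: a model call that sees the state and tool list
        if action.kind == "answer":
            break
        if action.kind == "forget":
            state.notes = [n for n in state.notes if n != action.note]
        elif action.kind == "search":
            for result in tools[action.tool](action.query):
                if result not in state.notes:  # don't re-inject duplicates
                    state.notes.append(result)
    return state


if __name__ == "__main__":
    kb = {"E1234": ["E1234 = sync timeout"],
          "sync timeout fix": ["raise sync_timeout to 30s", "E1234 = sync timeout"]}
    tools = {"kb": lambda q: kb.get(q, []), "logs": lambda q: ["noise line"]}
    # Scripted stand-in for the model: search, search elsewhere, prune, refine, answer.
    script = iter([Action("search", "kb", "E1234"), Action("search", "logs", "E1234"),
                   Action("forget", note="noise line"), Action("search", "kb", "sync timeout fix"),
                   Action("answer")])
    print(agentic_retrieve("How do I fix E1234?", lambda s: next(script), tools).notes)
    # ['E1234 = sync timeout', 'raise sync_timeout to 30s']
```

The step cap and the duplicate check are the cheap guardrails here; the interesting work lives in `decide`, which is where a real system would put the model.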

Two Launches, Same Lesson

Gemini 3 Pro at $2/$12 per million input/output tokens, with a million-token window. Opus 4.5 at $5/$25 per million input/output tokens, with a 200K window and intelligent memory management. Both shipped within a week of each other. Both claim state-of-the-art results on their respective benchmarks.

The pricing and the features tell you where this is going. Context is expensive. Context management is the differentiator. The teams that figure out how to give models the right context (everything they need, nothing they don't) are the ones building systems that actually solve real-world problems.
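To make "context is expensive" concrete, here is the per-call arithmetic at the list prices quoted above, comparing a 150K-token "dump everything" prompt with a 15K-token curated one, each producing 1K output tokens. The token counts are illustrative assumptions, and both prompts stay under 200K tokens because long-context requests can be billed at a higher tier; the dictionary keys are labels, not API model IDs.

```python
PRICES = {  # USD per million tokens (input, output), as quoted in this post
    "gemini-3-pro": (2.00, 12.00),
    "opus-4.5": (5.00, 25.00),
}


def call_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one call at flat per-million-token rates."""
    price_in, price_out = PRICES[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


if __name__ == "__main__":
    for model in PRICES:
        dump = call_cost(model, 150_000, 1_000)    # hypothetical "dump everything" prompt
        curated = call_cost(model, 15_000, 1_000)  # hypothetical curated context
        print(f"{model}: ${dump:.3f} vs ${curated:.3f} per call")
```

At these rates the curated prompt is roughly 7 to 8 times cheaper per call, and the gap scales linearly with traffic.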

What has emerged is a discipline that sits between retrieval, memory, agents, orchestration, and reasoning.

If the model is the brain, the context system is the working memory. And as models grow and evolve, the working-memory problem is getting harder, not easier. So what does your context pipeline actually look like?